Request A Quote

- UPC: 7.51493E+11
- Availability: 2-5+ weeks (ETA)

Purchase Protection

Every Order is Protected By BuySafe

Our commitment to sustainability ensures that every purchase supports responsible operations and a healthier planet.

SSL Secure Payment

We make sure your orders are processed as quickly as possible: stocked products ship the next business day, and vendor direct-ship products are processed directly with the vendor, subject to the vendor's lead time.

Express Shipping

Price Match Guarantee

Your payment is secure

Your privacy and security are our top priority. Our advanced encrypted payment system protects your information during every transaction.

Get expert help

Our team of experienced professionals is always ready to help.

Product Details


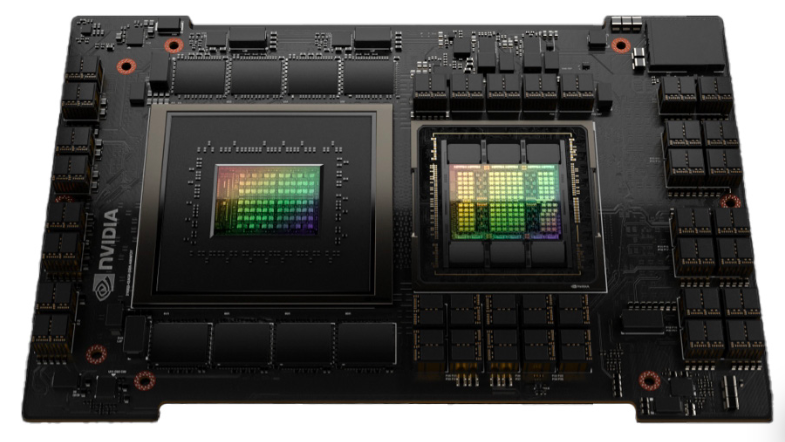

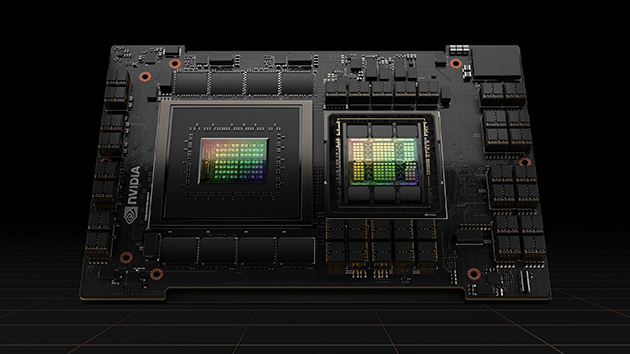

An order-of-magnitude leap for accelerated computing.

Tap into unprecedented performance, scalability, and security for every workload with the NVIDIA H100 Tensor Core GPU. With the NVIDIA® NVLink® Switch System, up to 256 H100 GPUs can be connected to accelerate exascale workloads, and a dedicated Transformer Engine supports trillion-parameter language models. H100’s combined technology innovations can speed up large language models by up to 30X over the previous generation to deliver industry-leading conversational AI.


Ready for Enterprise AI?

Enterprise adoption of AI is now mainstream, and organizations need end-to-end, AI-ready infrastructure that will accelerate them into this new era.

H100 for mainstream servers comes with a five-year subscription, including enterprise support, to the NVIDIA AI Enterprise software suite, simplifying AI adoption with the highest performance. This ensures organizations have access to the AI frameworks and tools they need to build H100-accelerated AI workflows such as AI chatbots, recommendation engines, vision AI, and more.


Securely accelerate workloads from enterprise to exascale.

Transformational AI training.

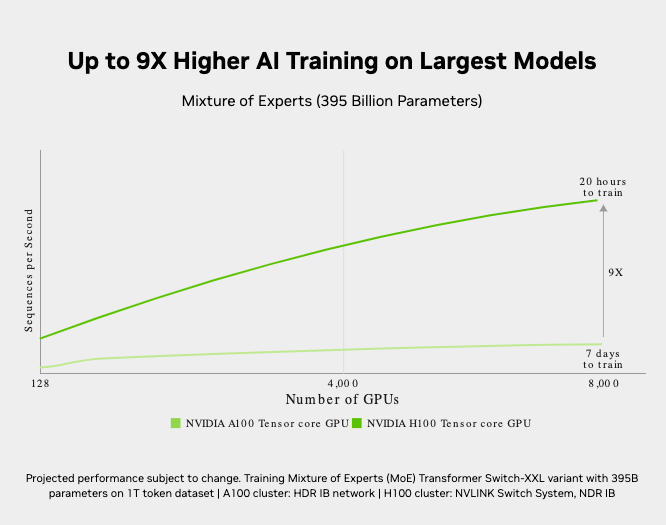

H100 features fourth-generation Tensor Cores and a Transformer Engine with FP8 precision that provides up to 9X faster training over the prior generation for mixture-of-experts (MoE) models. The combination of fourth-generation NVLink, which offers 900 gigabytes per second (GB/s) of GPU-to-GPU interconnect; the NVLink Switch System, which accelerates communication by every GPU across nodes; PCIe Gen5; and NVIDIA Magnum IO™ software delivers efficient scalability from small enterprises to massive, unified GPU clusters.
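To give a rough sense of why interconnect bandwidth matters for multi-GPU training, the sketch below uses the bandwidth term of a ring all-reduce, in which each GPU sends about 2(N-1)/N times the buffer size. It assumes 450 GB/s per direction for NVLink (half of the 900 GB/s total quoted above) and 64 GB/s per direction for PCIe Gen5 (half of the 128 GB/s in the specifications table), and it ignores latency, protocol overhead, and overlap with compute. The 10 GB buffer and 8-GPU count are hypothetical.

```python
def ring_allreduce_seconds(buffer_gb: float, num_gpus: int, link_gb_per_s: float) -> float:
    """Idealized, bandwidth-only time for a ring all-reduce.

    Each GPU sends 2 * (N - 1) / N * buffer bytes: N - 1 reduce-scatter steps
    plus N - 1 all-gather steps, each moving buffer / N. Latency is ignored.
    """
    if num_gpus < 2:
        return 0.0
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * buffer_gb
    return traffic_gb / link_gb_per_s


# Assumed per-direction bandwidths: half of the bidirectional figures on this page.
NVLINK_GB_PER_S = 450.0
PCIE_GEN5_GB_PER_S = 64.0

if __name__ == "__main__":
    buffer_gb, gpus = 10.0, 8  # hypothetical gradient buffer size and GPU count
    t_nvlink = ring_allreduce_seconds(buffer_gb, gpus, NVLINK_GB_PER_S)
    t_pcie = ring_allreduce_seconds(buffer_gb, gpus, PCIE_GEN5_GB_PER_S)
    print(f"NVLink: {t_nvlink * 1e3:.1f} ms, PCIe Gen5: {t_pcie * 1e3:.1f} ms")
```

Running it prints `NVLink: 38.9 ms, PCIe Gen5: 273.4 ms`; the roughly 7X gap simply mirrors the ratio of the assumed link bandwidths.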

Deploying H100 GPUs at data center scale delivers outstanding performance and brings the next generation of exascale high-performance computing (HPC) and trillion-parameter AI within the reach of all researchers.


Real-time deep learning inference.

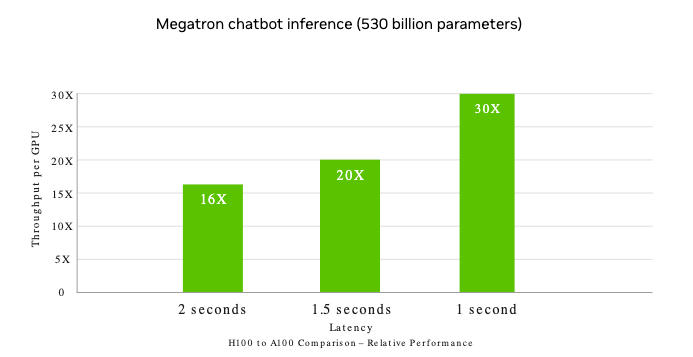

Up to 30X higher AI inference performance on the largest models.

AI solves a wide array of business challenges using an equally wide array of neural networks. A great AI inference accelerator must deliver not only the highest performance but also the versatility to accelerate these networks.

H100 further extends NVIDIA’s inference leadership with several advancements that accelerate inference by up to 30X and deliver the lowest latency. Fourth-generation Tensor Cores speed up all precisions, including FP64, TF32, FP32, FP16, and INT8, and the Transformer Engine uses FP8 and FP16 together to reduce memory usage and increase performance while still maintaining accuracy for large language models.
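Mixing FP8 with higher precisions depends on scaling values into FP8's narrow range before casting. The following plain-Python sketch emulates rounding to the E4M3 FP8 format (4 exponent bits, 3 mantissa bits, largest finite value 448) with a simple per-tensor scale derived from the largest absolute value. It is an illustrative assumption of how such scaling can work, not the Transformer Engine's API or its exact recipe; `quantize_e4m3` and `fp8_roundtrip` are hypothetical names.

```python
import math

# Illustrative sketch only; this is not the Transformer Engine API.
E4M3_MAX = 448.0               # largest finite E4M3 magnitude (1.75 * 2**8)
E4M3_MIN_NORMAL = 2.0 ** -6    # smallest normal E4M3 magnitude
E4M3_MANTISSA_BITS = 3


def quantize_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value (saturating, round-half-to-even)."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)  # saturate instead of overflowing
    if a < E4M3_MIN_NORMAL:
        step = 2.0 ** (-6 - E4M3_MANTISSA_BITS)  # subnormal spacing, 2**-9
    else:
        _, exp = math.frexp(a)  # a = m * 2**exp with 0.5 <= m < 1
        step = 2.0 ** (exp - 1 - E4M3_MANTISSA_BITS)
    return sign * min(round(a / step) * step, E4M3_MAX)


def fp8_roundtrip(values: list[float]) -> list[float]:
    """Scale so the largest magnitude maps to E4M3_MAX, quantize, then unscale."""
    amax = max((abs(v) for v in values), default=0.0)
    if amax == 0.0:
        return list(values)
    scale = E4M3_MAX / amax
    return [quantize_e4m3(v * scale) / scale for v in values]
```

With only 3 mantissa bits, each normal value carries a relative rounding error of at most 2**-4 (6.25%), which is why the scale factor, rather than the raw values, determines how much of FP8's limited range a tensor actually uses.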


Exascale high-performance computing.

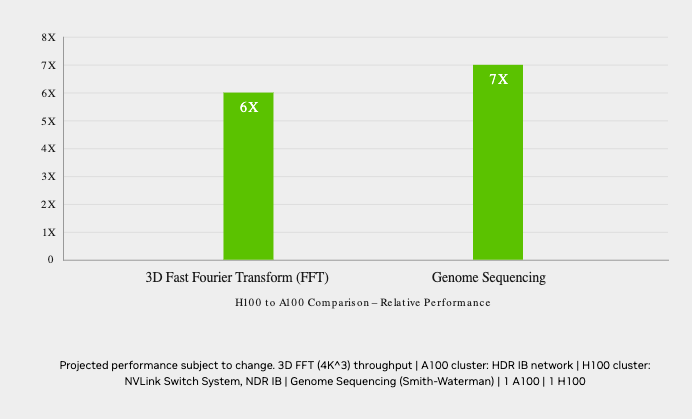

Up to 7X higher performance for HPC applications.

The NVIDIA data center platform consistently delivers performance gains beyond Moore’s Law, and H100’s new breakthrough AI capabilities further amplify the power of HPC+AI to accelerate time to discovery for scientists and researchers working on the world’s most important challenges.

H100 triples the floating-point operations per second (FLOPS) of double-precision Tensor Cores compared with the prior generation, delivering 60 teraFLOPS of FP64 computing for HPC. AI-fused HPC applications can also use H100’s TF32 precision to achieve one petaFLOP of throughput for single-precision matrix-multiply operations, with zero code changes.
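TF32 keeps FP32's 8-bit exponent (and therefore its range) but only 10 explicit mantissa bits, which helps explain how FP32 matrix-multiply code can run on TF32 Tensor Cores without source changes, at a small cost in precision. The sketch below emulates that precision loss in plain Python by rounding a float32 bit pattern to 10 mantissa bits. Round-to-nearest-even is an assumption here; the rounding a particular library or GPU applies may differ, and `round_to_tf32` is a hypothetical helper.

```python
import struct


def round_to_tf32(x: float) -> float:
    """Round x to TF32 precision: FP32's 8-bit exponent, 10 explicit mantissa bits.

    x is first rounded to float32, then its bit pattern is rounded to nearest,
    ties to even (an assumed rounding mode). Assumes a finite input in float32 range.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    dropped = 23 - 10                       # FP32 has 23 mantissa bits, TF32 keeps 10
    lsb = (bits >> dropped) & 1             # lowest kept bit, used to break ties to even
    bits = (bits + (1 << (dropped - 1)) - 1 + lsb) & 0xFFFFFFFF
    bits &= ~((1 << dropped) - 1) & 0xFFFFFFFF  # clear the 13 dropped bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]
```

For example, `round_to_tf32(1 + 2**-11)` returns `1.0`: the input lies exactly halfway between two TF32 values, and the tie goes to the one with an even last mantissa bit.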

H100 also features DPX instructions that deliver 7X higher performance than NVIDIA A100 Tensor Core GPUs and 40X speedups over traditional dual-socket CPU-only servers on dynamic programming algorithms, such as Smith-Waterman for DNA sequence alignment.
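Smith-Waterman is a classic dynamic programming recurrence: each cell of a score matrix takes the maximum of zero, a diagonal match/mismatch move, and two gap moves. DPX instructions are aimed at speeding up exactly this kind of add-then-max step. The plain-Python sketch below computes the best local alignment score with a linear gap penalty; the scoring values (match +2, mismatch -1, gap -2) are illustrative assumptions, and the code runs on the CPU without using DPX.

```python
def smith_waterman_score(a: str, b: str, match: int = 2, mismatch: int = -1, gap: int = -2) -> int:
    """Best local alignment score of sequences a and b (linear gap penalty).

    Keeps only the previous row of the DP matrix, so memory is O(len(b)).
    Scoring values are illustrative defaults, not a standard scheme.
    """
    prev = [0] * (len(b) + 1)
    best = 0
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = prev[j] + gap        # a[i-1] aligned against a gap in b
            left = cur[j - 1] + gap   # b[j-1] aligned against a gap in a
            # Local alignment: a cell never drops below zero (start fresh).
            cur[j] = max(0, diag, up, left)
            best = max(best, cur[j])
        prev = cur
    return best
```

For example, `smith_waterman_score("ACGT", "ACGGT")` returns 6. Every cell depends only on its three neighbors, so many cells along an anti-diagonal can be computed in parallel, which is what makes the recurrence a good fit for GPU hardware.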


Accelerated data analytics.

Data analytics often consumes the majority of time in AI application development. Because large datasets are scattered across multiple servers, scale-out solutions built on commodity CPU-only servers get bogged down by a lack of scalable computing performance.

Accelerated servers with H100 deliver the compute power, along with 3 terabytes per second (TB/s) of memory bandwidth per GPU and scalability with NVLink and NVSwitch, to tackle data analytics with high performance and scale to support massive datasets. Combined with NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform is uniquely able to accelerate these huge workloads with unparalleled levels of performance and efficiency.
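One way to see why memory bandwidth matters as much as raw compute for analytics is the roofline model: attainable throughput is the lesser of peak compute and arithmetic intensity (FLOPs per byte moved) times memory bandwidth. The sketch plugs in the H100 SXM figures from the specifications table on this page (34 teraFLOPS FP64, 3.35TB/s). The two example intensities are illustrative assumptions, and real kernels rarely reach either ceiling.

```python
def roofline_flops(intensity_flop_per_byte: float, peak_flops: float, bandwidth_bytes_per_s: float) -> float:
    """Attainable FLOP/s under the roofline model: min(peak compute, intensity * bandwidth)."""
    return min(peak_flops, intensity_flop_per_byte * bandwidth_bytes_per_s)


PEAK_FP64 = 34e12        # H100 SXM FP64 (non-Tensor Core), from the spec table
HBM_BANDWIDTH = 3.35e12  # H100 SXM GPU memory bandwidth in bytes/s, from the spec table

# Ridge point: the intensity above which a kernel becomes compute-bound (about 10.1 FLOP/byte).
ridge = PEAK_FP64 / HBM_BANDWIDTH

# Hypothetical workloads: a scan doing ~1 FLOP per 4-byte value vs. a cache-friendly matmul tile.
for name, intensity in [("column scan / filter", 0.25), ("dense FP64 matmul tile", 50.0)]:
    attainable = roofline_flops(intensity, PEAK_FP64, HBM_BANDWIDTH)
    bound = "memory-bound" if intensity < ridge else "compute-bound"
    print(f"{name}: {attainable / 1e12:.2f} TFLOP/s ({bound})")
```

Typical analytics operators such as scans, filters, and joins sit far to the left of the ridge point, so their speed tracks memory bandwidth rather than peak FLOPS.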


Enterprise-ready utilization.

IT managers seek to maximize utilization (both peak and average) of compute resources in the data center. They often employ dynamic reconfiguration of compute to right-size resources for the workloads in use.

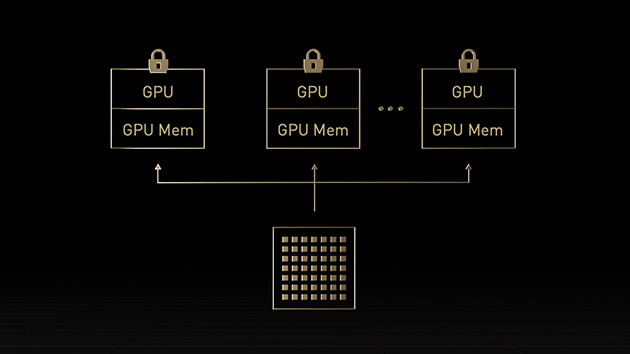

Second-generation Multi-Instance GPU (MIG) in H100 maximizes the utilization of each GPU by securely partitioning it into as many as seven separate instances. With confidential computing support, H100 allows secure end-to-end, multi-tenant usage, ideal for cloud service provider (CSP) environments.

H100 with MIG lets infrastructure managers standardize their GPU-accelerated infrastructure while retaining the flexibility to provision GPU resources with greater granularity, securely providing developers the right amount of accelerated compute and optimizing usage of all their GPU resources.
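As a simplified illustration of provisioning at finer granularity, the sketch below packs developer requests, expressed in hypothetical compute-slice units, onto GPUs that each offer seven slices (matching the up-to-seven MIG instances described above), using first-fit decreasing bin packing. Real MIG provisioning uses fixed instance profiles with placement rules and is configured through NVIDIA's own tooling. None of that is modeled here, and the request names are made up.

```python
# Hypothetical slice units; real MIG uses fixed instance profiles and placement rules.
SLICES_PER_GPU = 7  # up to seven MIG instances per H100, per the text above


def pack_requests(requests: dict[str, int], slices_per_gpu: int = SLICES_PER_GPU) -> list[list[tuple[str, int]]]:
    """First-fit decreasing: put each request (name -> slices) on the first GPU with room.

    Returns one list of (name, slices) pairs per GPU that ends up in use.
    """
    gpus: list[list[tuple[str, int]]] = []
    free: list[int] = []
    # Placing large requests first usually leaves fewer stranded slices.
    for name, size in sorted(requests.items(), key=lambda kv: kv[1], reverse=True):
        if not 1 <= size <= slices_per_gpu:
            raise ValueError(f"{name}: request of {size} slices cannot fit on one GPU")
        for idx, room in enumerate(free):
            if size <= room:
                gpus[idx].append((name, size))
                free[idx] -= size
                break
        else:  # no existing GPU had room, so bring another one into use
            gpus.append([(name, size)])
            free.append(slices_per_gpu - size)
    return gpus


if __name__ == "__main__":
    demo = {"alice-train": 4, "bob-notebook": 1, "carol-infer": 2, "dave-infer": 3, "erin-test": 1}
    for i, gpu in enumerate(pack_requests(demo)):
        print(f"GPU {i}: {gpu}")
```

The demo's 11 slices fit on two GPUs: one holds the 4- and 3-slice requests, and the other holds the three smaller ones with room to spare. First-fit decreasing is only a heuristic and is not guaranteed to find the minimum number of GPUs.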


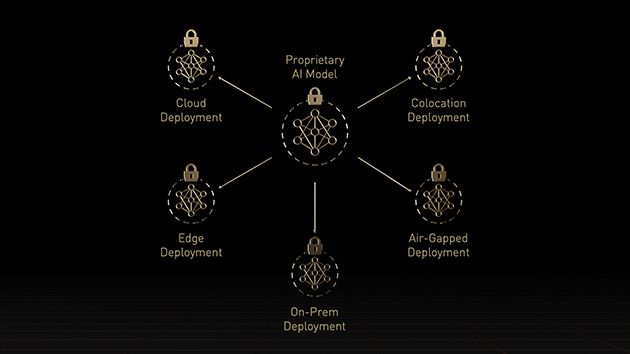

Built-in confidential computing.

Today’s confidential computing solutions are CPU-based, which is too limited for compute-intensive workloads like AI and HPC. NVIDIA Confidential Computing is a built-in security feature of the NVIDIA Hopper™ architecture that makes H100 the world’s first accelerator with confidential computing capabilities. Users can protect the confidentiality and integrity of their data and applications in use while accessing the unsurpassed acceleration of H100 GPUs. NVIDIA Confidential Computing creates a hardware-based trusted execution environment (TEE) that secures and isolates the entire workload running on a single H100 GPU, multiple H100 GPUs within a node, or individual MIG instances. GPU-accelerated applications can run unchanged within the TEE and don’t have to be partitioned. Users can combine the power of NVIDIA software for AI and HPC with the security of a hardware root of trust offered by NVIDIA Confidential Computing.


Unparalleled performance for large-scale AI and HPC.

The Hopper Tensor Core GPU will power the NVIDIA Grace Hopper CPU+GPU architecture, purpose-built for terabyte-scale accelerated computing and providing 10X higher performance on large-model AI and HPC. The NVIDIA Grace CPU leverages the flexibility of the Arm® architecture to create a CPU and server architecture designed from the ground up for accelerated computing. The Hopper GPU is paired with the Grace CPU using NVIDIA’s ultra-fast chip-to-chip interconnect, which delivers 900GB/s of bandwidth, 7X faster than PCIe Gen5. This innovative design will deliver up to 30X higher aggregate system memory bandwidth to the GPU compared to today's fastest servers and up to 10X higher performance for applications that process terabytes of data.

Supercharge Large Language Model Inference

For LLMs of up to 175 billion parameters, the PCIe-based H100 NVL with NVLink bridge uses the Transformer Engine, NVLink, and 188GB of HBM3 memory to provide optimum performance and easy scaling across any data center, bringing LLMs to the mainstream. Servers equipped with H100 NVL GPUs increase GPT-175B model performance by up to 12X over NVIDIA DGX™ A100 systems while maintaining low latency in power-constrained data center environments.
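A back-of-the-envelope check shows why memory capacity matters here: model weights alone take roughly parameters × bytes per parameter. The sketch compares a 175-billion-parameter model at FP16 and FP8 against the 188GB of HBM3 quoted for H100 NVL. It deliberately ignores the KV cache, activations, and framework overhead, all of which add to real memory needs, and it treats 1 GB as 10^9 bytes. It does not describe how any particular deployment is configured.

```python
# Weights only: KV cache, activations and runtime overhead are ignored.
BYTES_PER_PARAM = {"FP16": 2, "FP8": 1}
H100_NVL_MEMORY_GB = 188  # combined HBM3 of an H100 NVL pair, as quoted above


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for model weights only, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 175e9
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        verdict = "fits" if gb <= H100_NVL_MEMORY_GB else "does not fit"
        print(f"{precision}: {gb:.0f} GB of weights, {verdict} in {H100_NVL_MEMORY_GB} GB")
```

This prints `FP16: 350 GB of weights, does not fit in 188 GB` and then `FP8: 175 GB of weights, fits in 188 GB`, which shows how much the lower-precision formats handled by the Transformer Engine can change what fits in GPU memory.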

Product Specifications

| Specification | H100 SXM | H100 PCIe | H100 NVL¹ |
|---|---|---|---|
| FP64 | 34 teraFLOPS | 26 teraFLOPS | 68 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| FP32 | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| TF32 Tensor Core | 989 teraFLOPS² | 756 teraFLOPS² | 1,979 teraFLOPS² |
| BFLOAT16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP8 Tensor Core | 3,958 teraFLOPS² | 3,026 teraFLOPS² | 7,916 teraFLOPS² |
| INT8 Tensor Core | 3,958 TOPS² | 3,026 TOPS² | 7,916 TOPS² |
| GPU memory | 80GB | 80GB | 188GB |
| GPU memory bandwidth | 3.35TB/s | 2TB/s | 7.8TB/s³ |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG | 14 NVDEC, 14 JPEG |
| Max thermal design power (TDP) | Up to 700W (configurable) | 300-350W (configurable) | 2x 350-400W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB each | Up to 14 MIGs @ 12GB each |
| Form factor | SXM | PCIe dual-slot air-cooled | 2x PCIe dual-slot air-cooled |
| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s |
| Server options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1-8 GPUs | Partner and NVIDIA-Certified Systems with 2-4 pairs |
| NVIDIA AI Enterprise | Add-on | Included | Included |

Shipping & Return

Return Policy

You need to have a Return Authorization (RA) number before returning any products to HSSL Technologies.

For a hard copy of this form and a shipping label, please include your request in the form below.

You can also request an RA online by submitting an RA Request via email. Once you have provided HSSL Technologies with the appropriate information, we will contact you with an assigned RA number.

Defective or DOA Products

DOA or defective products are returned for REPLACEMENT ONLY with the same product/model. Deviations from this policy may result in a 20% Returns Processing Service Charge.

- Defective products must be in the original factory carton with all original packing materials.

- The Return Authorization number must be on the shipping label, not the carton. Please do not write on the carton.

- Returns must be shipped freight prepaid. Products received freight collect, without an RA number, or not approved for return will be refused.

- Return Authorization numbers are valid for fourteen (14) days only. Products returned after expiration will be refused.

- HSSL credits are based on the purchase price or current price, whichever is lower.

General Return Terms

You may return most new, unopened items within 30 days of delivery for a full refund. We will also pay return shipping costs if the return is a result of our error (e.g., you received an incorrect or defective item).

You should expect to receive your refund within approximately six weeks of giving your package to the return shipper. This period includes:

- 5–10 business days for us to receive your return from the shipper

- 3–5 business days for us to process your return

- 5–10 business days for your bank to process the refund
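Adding up the stages listed above gives the expected range in business days. This is a simple sketch of that arithmetic. It assumes the stages run back to back and that a week has five business days, and it does not account for holidays or shipping delays.

```python
# (min, max) business days for each refund stage, as listed in the policy above.
REFUND_STAGES = {
    "return in transit to us": (5, 10),
    "return processing": (3, 5),
    "bank processing": (5, 10),
}


def refund_window_business_days(stages: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the minimum and maximum durations of stages assumed to run sequentially."""
    low = sum(lo for lo, _ in stages.values())
    high = sum(hi for _, hi in stages.values())
    return low, high


if __name__ == "__main__":
    low, high = refund_window_business_days(REFUND_STAGES)
    # Assumes 5 business days per week.
    print(f"{low}-{high} business days (about {low / 5:.1f}-{high / 5:.0f} weeks)")
```

This prints `13-25 business days (about 2.6-5 weeks)`, which falls within the approximately six-week estimate given above.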

If you need to return an item, please Contact Us with your order number and product details. We will respond quickly with instructions for returning items from your order.

Shipping Policy

We can ship to virtually any address in the world. Note that there are restrictions on some products, and certain products cannot be shipped to international destinations.

When you place an order, we will estimate shipping and delivery dates based on the availability of your items and the shipping options you select. Depending on the provider, estimated shipping dates may appear on the shipping quotes page.

Please also note that shipping rates for many items are weight-based. The weight of each item can be found on its product detail page. To reflect the policies of the shipping companies we use, all weights will be rounded up to the next full pound.
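The rounding rule above can be expressed as a ceiling on the weight. The policy does not say whether rounding is applied per item or per package, so this sketch assumes it is applied once to the package total; `billable_weight_lb` is a hypothetical helper, not part of any carrier's API.

```python
import math


def billable_weight_lb(item_weights_lb: list[float]) -> int:
    """Round a package's total weight up to the next full pound.

    Assumption: rounding is applied once to the package total; whole-pound
    totals are left unchanged.
    """
    total = sum(item_weights_lb)
    # Trim binary floating-point noise (0.1 is not exactly representable)
    # so an exact whole-pound total is not bumped up an extra pound.
    return math.ceil(round(total, 6))
```

For example, `billable_weight_lb([1.2, 0.5])` returns 2.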

Has my order shipped?

Click the "My Account" link at the top right-hand side of our site to check your order status.

How do I change quantities or cancel an item in my order?

Click the "My Account" link at the top right-hand side of our site to view orders you have placed. Then click the "Change quantities / cancel orders" link to find and edit your order. Please note that once an order has begun processing or has shipped, it can no longer be edited.

How do I track my order?

Click the "My Account" link at the top right-hand side of our site to track your order.

My order never arrived.

Click the "My Account" link at the top right-hand side of our site to track your order status. Make sure that all of the items in your order have already shipped. If your order displays package tracking numbers, check with the shipper to confirm that your packages were delivered. If each package shows a status of "delivered", please contact customer service for assistance.

An item is missing from my shipment.

Click the "My Account" link at the top right-hand side of our site to track your order status. Make sure that all of the items in your order have already shipped. If your order displays package tracking numbers, check with the shipper to confirm that your packages were delivered. If each package shows a status of "delivered", please contact customer service for assistance.

My product is missing parts.

Click the "My Account" link at the top right-hand side of our site to track your order status. Make sure that all of the items in your order have already shipped. If your order displays package tracking numbers, check with the shipper to confirm that your packages were delivered. If each package shows a status of "delivered", please contact customer service for assistance.

When will my backorder arrive?

Backordered items are items whose restock date our suppliers cannot predict. As soon as they are back in stock, we will ship them to you.

Warranty

Why Choose HSSL?

We connect businesses with the right technology solutions to power growth, security, and efficiency.

Expertise

Backed by years of industry knowledge, our specialists understand the challenges modern businesses face.

Partnerships

We collaborate with top-tier technology providers to bring you innovative & high-performance products.

End-to-End Support

Our dedicated support ensures your systems stay optimized, secure, and ready for what’s next.

Scalable Solutions

Our adaptive approach ensures your IT infrastructure evolves seamlessly as your business expands.

Expert in IT & Security Solutions

At HSSL, we specialize in delivering cutting-edge IT infrastructure, cloud, and cybersecurity services that empower businesses to thrive in a digital-first world. Our trusted professionals provide reliable insights on:


Digital Transformation Strategy

Empowering businesses to adapt and grow through modern, secure, and scalable digital infrastructure.


Advanced Cybersecurity

Protecting your organization from digital threats with proactive monitoring and smart security frameworks.


Cloud & Infrastructure Management

Optimizing cloud performance and IT operations for seamless scalability and improved business agility.


Data Backup & Recovery

Ensuring business continuity through secure data management, automated backups, and rapid recovery solutions.


Network & Endpoint Solutions

Delivering secure, high-performance networking systems that keep your business connected and efficient.


Technology Consulting

Guiding organizations toward smarter IT investments and solutions that align with their long-term goals.