Posted by Charu Chaubal on Dec 18th 2024

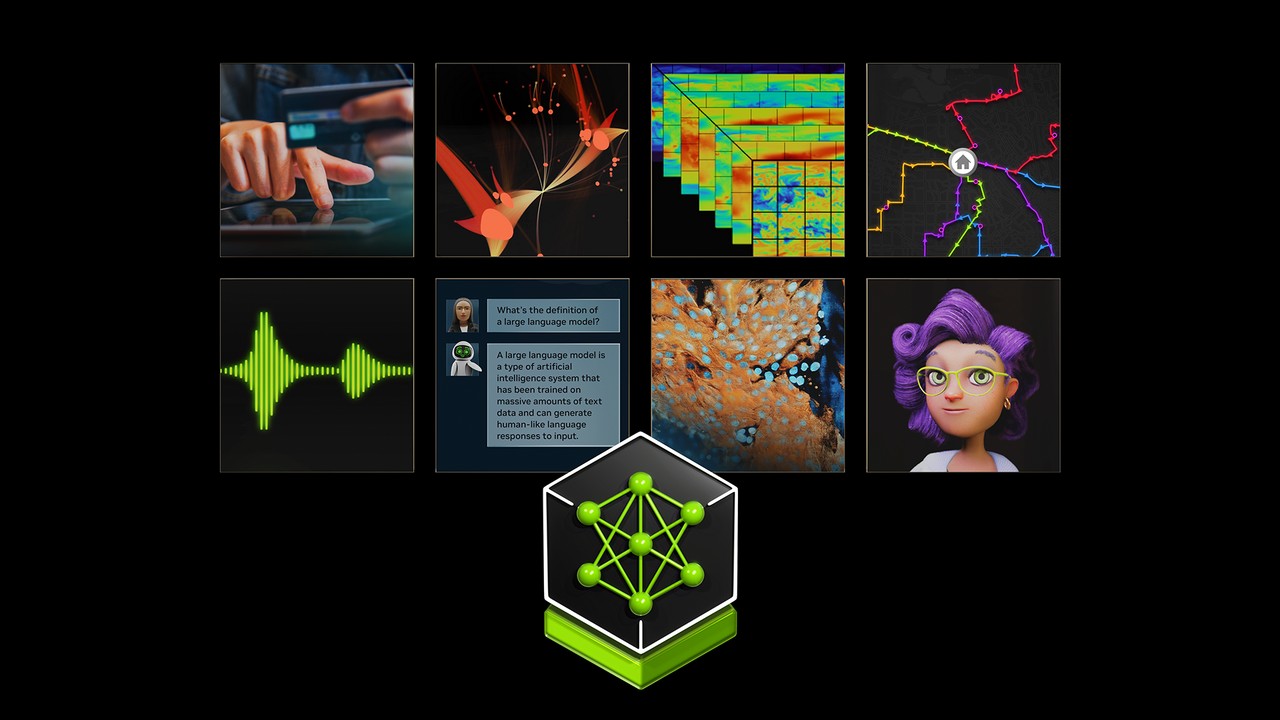

AI agents are emerging as the newest way for organizations to increase efficiency, improve productivity, and accelerate innovation. These agents are more advanced than prior AI applications, with the ability to autonomously reason through tasks, call out to other tools, and incorporate both enterprise data and employee knowledge to produce valuable business outcomes. They’re being embedded into applications customized for each organization’s needs.

The latest release of NVIDIA AI Enterprise includes several new features that help make AI agents more secure, stable, and easier to deploy.

Simplified management of AI agent pipelines

The newly launched NVIDIA NIM Operator simplifies the deployment and management of NIM microservices used to deploy AI pipelines on Kubernetes. NIM Operator automates the deployment of AI pipelines and enhances performance with capabilities such as intelligent model pre-caching for lower initial inference latency and faster autoscaling.

You can choose to autoscale based on CPU, GPU, or NIM-specific metrics, such as NIM max requests and KV cache usage.
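
Metric-based autoscaling can be sketched with the standard Kubernetes Horizontal Pod Autoscaler rule, desired = ceil(current × currentMetric / targetMetric), which leaves the replica count unchanged when the ratio is within a tolerance band (0.1 by default). This is a minimal sketch that assumes NIM Operator scaling behaves like an HPA-style controller. The `desired_replicas` helper and the metric values are hypothetical, and real HPA behavior also includes stabilization windows and pod-readiness handling that are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """HPA-style replica calculation (hypothetical helper, not a NIM Operator API).

    current_metric is the average per-pod value of the chosen metric, for example
    GPU utilization or a per-pod request count; target_metric is the per-pod target.
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured minimum and maximum replica counts.
    return max(min_replicas, min(max_replicas, desired))


# Example: 2 replicas averaging 12 concurrent requests against a target of 8 per pod.
print(desired_replicas(2, 12, 8))  # 3
```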

It also simplifies upgrades with rolling updates: change the version number of the NIM microservice, and the NIM Operator updates the deployments in the cluster.
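
A rolling upgrade replaces pods in batches so that serving never stops. Assuming the update is carried out like a standard Kubernetes Deployment rolling update (an assumption; the source does not say how the Operator implements it), the number of extra pods and unavailable pods allowed at once follows the `maxSurge` and `maxUnavailable` settings. Both default to 25%, with `maxSurge` rounding up and `maxUnavailable` rounding down. The `rollout_bounds` helper below is hypothetical and only computes those bounds.

```python
def rollout_bounds(replicas: int,
                   max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max_total_pods, min_available_pods) during a rolling update.

    Mirrors Kubernetes Deployment semantics (assumed to apply here): a percentage
    maxSurge rounds up and a percentage maxUnavailable rounds down.
    """
    surge = (replicas * max_surge_pct + 99) // 100          # integer ceiling
    unavailable = (replicas * max_unavailable_pct) // 100   # integer floor
    return replicas + surge, replicas - unavailable


# Upgrading a 4-replica and a 10-replica deployment with the default settings.
print(rollout_bounds(4))   # (5, 3)
print(rollout_bounds(10))  # (13, 8)
```

With the defaults, a 4-replica service never drops below 3 ready pods while the new version rolls out.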

NVIDIA now offers the following paths for deploying NIM microservices in production AI pipelines:

- Helm

- KServe

- NIM Operator

Security and API stability for AI models

NVIDIA AI Enterprise includes monthly feature branch releases of AI and data science software, which contain top-of-tree software updates and are ideal for AI developers who want the latest features.

This software is maintained by NVIDIA for one month until the next version is released, and available security fixes are applied before each release. Although this is great for customers who want to stay on the leading edge with the newest capabilities, there’s no guarantee that APIs will not change from month to month. This can make it challenging to build enterprise solutions that need to be both secure and reliable over time, as developers may need to adjust applications after an update.

To address this need, NVIDIA AI Enterprise also includes production branches of AI software. Production branches ensure API stability and regular security updates and are meant for deploying AI in production when stability is required. Production branches are released every 6 months and have a 9-month lifecycle.
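
Because a new production branch ships every 6 months and each is supported for 9 months, consecutive branches overlap for 3 months, which gives teams a window to migrate. A minimal sketch of that arithmetic, assuming months are counted from the first branch's release at month 0 (the month indexing and the `active_branches` helper are illustrative only):

```python
RELEASE_INTERVAL_MONTHS = 6  # a new production branch every 6 months
LIFECYCLE_MONTHS = 9         # each production branch is supported for 9 months


def active_branches(month: int) -> list[int]:
    """Return the release months of all production branches supported at `month`.

    A branch released at month r is treated as supported for r <= month < r + 9.
    """
    return [r for r in range(0, month + 1, RELEASE_INTERVAL_MONTHS)
            if r <= month < r + LIFECYCLE_MONTHS]


overlap = LIFECYCLE_MONTHS - RELEASE_INTERVAL_MONTHS
print(overlap)              # 3
print(active_branches(7))   # [0, 6]
print(active_branches(10))  # [6]
```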

Throughout the 9-month lifecycle of each production branch, NVIDIA continuously monitors critical- and high-severity common vulnerabilities and exposures (CVEs) and releases monthly security patches. By doing so, the AI frameworks, libraries, models, and tools included in NVIDIA AI Enterprise can be updated for security fixes while eliminating the risk of breaking an API.
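
"Critical" and "high" CVE severities are commonly defined by the CVSS v3.x qualitative rating scale: High covers base scores 7.0 to 8.9 and Critical covers 9.0 to 10.0. Whether NVIDIA's triage uses exactly this scale is an assumption. The sketch below only illustrates filtering a set of findings down to those that would fall under such a monthly patch policy; the CVE identifiers and scores are placeholders.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"invalid CVSS score: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def needs_patch(findings: dict[str, float]) -> list[str]:
    """Return the IDs of findings rated High or Critical (assumed patch policy)."""
    return sorted(cve for cve, score in findings.items()
                  if cvss_severity(score) in ("High", "Critical"))


# Placeholder identifiers, not real CVEs.
findings = {"CVE-XXXX-0001": 9.8, "CVE-XXXX-0002": 5.3, "CVE-XXXX-0003": 7.5}
print(needs_patch(findings))  # ['CVE-XXXX-0001', 'CVE-XXXX-0003']
```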

The new release is expected to add these NIM microservices to production branches:

- Meta’s Llama 3.1 family of models:

  - Llama-3.1-Instruct-8B

  - Llama-3.1-Instruct-70B

  - Llama-3.1-Instruct-405B

- Mistral AI’s Mistral 7B and the Mixtral 8x7B and 8x22B mixture-of-experts (MoE) models:

  - Mixtral-8x7B

  - Mixtral-8x22B

  - Mistral-7B

- NVIDIA Nemotron-4-340B family of models for synthetic data generation:

  - Nemotron-4-340B-Instruct

  - Nemotron-4-340B-Reward

- NVIDIA NeMo Retriever QA E5 Embedding v5 text embedding model:

  - NV-EmbedQA-E5-v5

You can build AI agents using these microservices with the confidence that NVIDIA will secure and maintain them without breaking any application dependencies during the lifetime of that production branch.
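
LLM NIM microservices expose an OpenAI-compatible HTTP API, and a stable production branch is what lets agent code written against that API keep working across patch releases. The sketch below builds a chat-completions request using only the Python standard library. The base URL `http://localhost:8000` and the model identifier `meta/llama-3.1-8b-instruct` are assumptions about a typical local deployment; check the model name your NIM actually reports at its `/v1/models` endpoint.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"    # assumption: local NIM endpoint
MODEL = "meta/llama-3.1-8b-instruct"  # assumption: model identifier served by the NIM


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request to a running NIM and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With a NIM running at the assumed address, `chat("Summarize our refund policy.")` returns the model's reply; only the request-building step is independent of a live server.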

These NIM microservices join numerous other AI libraries and frameworks already on a production branch:

- PyTorch

- TensorFlow

- RAPIDS

- NVIDIA TensorRT

- NVIDIA Triton Inference Server

- NVIDIA Morpheus

- NVIDIA Holoscan

Other AI frameworks that are new to a production branch with this release include the following:

- DeepStream for AI-based video and image understanding and multi-sensor processing

- DGL and PyG for training graph neural networks

AI for healthcare

Customers in highly regulated industries often require software to be supported for even longer periods. For these customers, NVIDIA AI Enterprise also includes long-term support branches (LTSB), which are supported with stable APIs for 3 years.
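
The three support windows differ by more than an order of magnitude: about 1 month for a feature branch, 9 months for a production branch, and 36 months for an LTSB. A small sketch that computes an end-of-support date from a release date, assuming support ends exactly N calendar months after release (actual end dates come from NVIDIA's published lifecycle, not from this formula); the release date below is hypothetical.

```python
import calendar
from datetime import date

# Support durations in months, taken from the branch descriptions above.
SUPPORT_MONTHS = {"feature": 1, "production": 9, "ltsb": 36}


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the length of the target month."""
    total = d.month - 1 + months
    year, month = d.year + total // 12, total % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def end_of_support(release: date, branch: str) -> date:
    """Assumed end-of-support date for a branch type released on `release`."""
    return add_months(release, SUPPORT_MONTHS[branch])


release = date(2024, 5, 31)  # hypothetical release date
print(end_of_support(release, "production"))  # 2025-02-28
print(end_of_support(release, "ltsb"))        # 2027-05-31
```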

LTSB 1 coincided with the first release of NVIDIA AI Enterprise in 2021 and includes foundational AI components:

- PyTorch

- TensorFlow

- RAPIDS

- TensorRT

- Triton Inference Server

- Infrastructure software, such as the vGPU driver

LTSB 2, part of this latest release of NVIDIA AI Enterprise, adds Holoscan, including the Holoscan SDK and the Holoscan Deployment Stack.

Holoscan is the NVIDIA AI sensor processing platform that combines hardware systems for low-latency sensor and network connectivity, optimized libraries for data processing and AI, and core capabilities to run real-time streaming, imaging, and other applications. Holoscan SDK includes C++ and Python APIs to create sensor processing workflows with inherent support for sensor I/O, compute, AI inferencing, and visualization, while leveraging NVIDIA GPU acceleration.
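
Holoscan applications are built as graphs of operators that pass data from a sensor source through processing stages to a sink. The sketch below mimics that source → compute → sink pattern with plain Python generators to show the shape of a streaming workflow. It deliberately does not use the Holoscan SDK API; the operator names, the synthetic readings, and the smoothing step are all hypothetical.

```python
from collections import deque
from typing import Iterable, Iterator


def sensor_source(readings: Iterable[float]) -> Iterator[float]:
    """Hypothetical source operator: emits one reading per frame."""
    for value in readings:
        yield value


def moving_average(stream: Iterator[float], window: int = 3) -> Iterator[float]:
    """Hypothetical compute operator: smooths the stream over the last `window` readings."""
    buf: deque = deque(maxlen=window)
    for value in stream:
        buf.append(value)
        yield sum(buf) / len(buf)


def threshold_sink(stream: Iterator[float], limit: float) -> list[int]:
    """Hypothetical sink operator: records frame indices whose smoothed value exceeds `limit`."""
    return [i for i, value in enumerate(stream) if value > limit]


# Chain the operators, analogous to connecting operators in an application graph.
alerts = threshold_sink(moving_average(sensor_source([1, 1, 1, 9, 9, 9, 1])), limit=4.0)
print(alerts)  # [4, 5, 6]
```

Because each stage is a generator, frames flow through one at a time rather than being buffered in full, which is the property a real-time sensor pipeline depends on.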

One of the most prevalent uses of Holoscan is in medical devices, such as those for medical imaging and robotic surgery. Because medical devices have strict long-term supportability requirements, adding Holoscan to an LTSB, combined with long-life hardware, enables device manufacturers to build the next generation of intelligent AI-enabled medical devices with faster time to market and lower maintenance costs.

The Holoscan platform with LTSB is also an effective solution beyond medical devices, wherever an industrial-grade, production-ready platform is needed to build AI-enabled sensor processing products.

More ways to deploy NIM microservices

NVIDIA AI Enterprise is supported both on premises and on public cloud services. You can deploy NIM microservices and other software containers into self-managed Kubernetes clusters running on cloud instances, but many customers prefer to use Kubernetes managed by the cloud provider.

Google Cloud has now integrated NVIDIA NIM into Google Kubernetes Engine to provide enterprise customers with a simplified path for deploying optimized models directly from the Google Cloud Marketplace.

Availability

The next version of NVIDIA AI Enterprise is available now. License holders can download production branch versions of most AI software containers right away, but the NIM microservices are expected to be added to the production branch at the end of November. As always, you also get the benefit of enterprise support, which includes guaranteed response times and access to NVIDIA experts for timely issue resolution.