Posted by HSSL Technologies on Apr 10th 2025

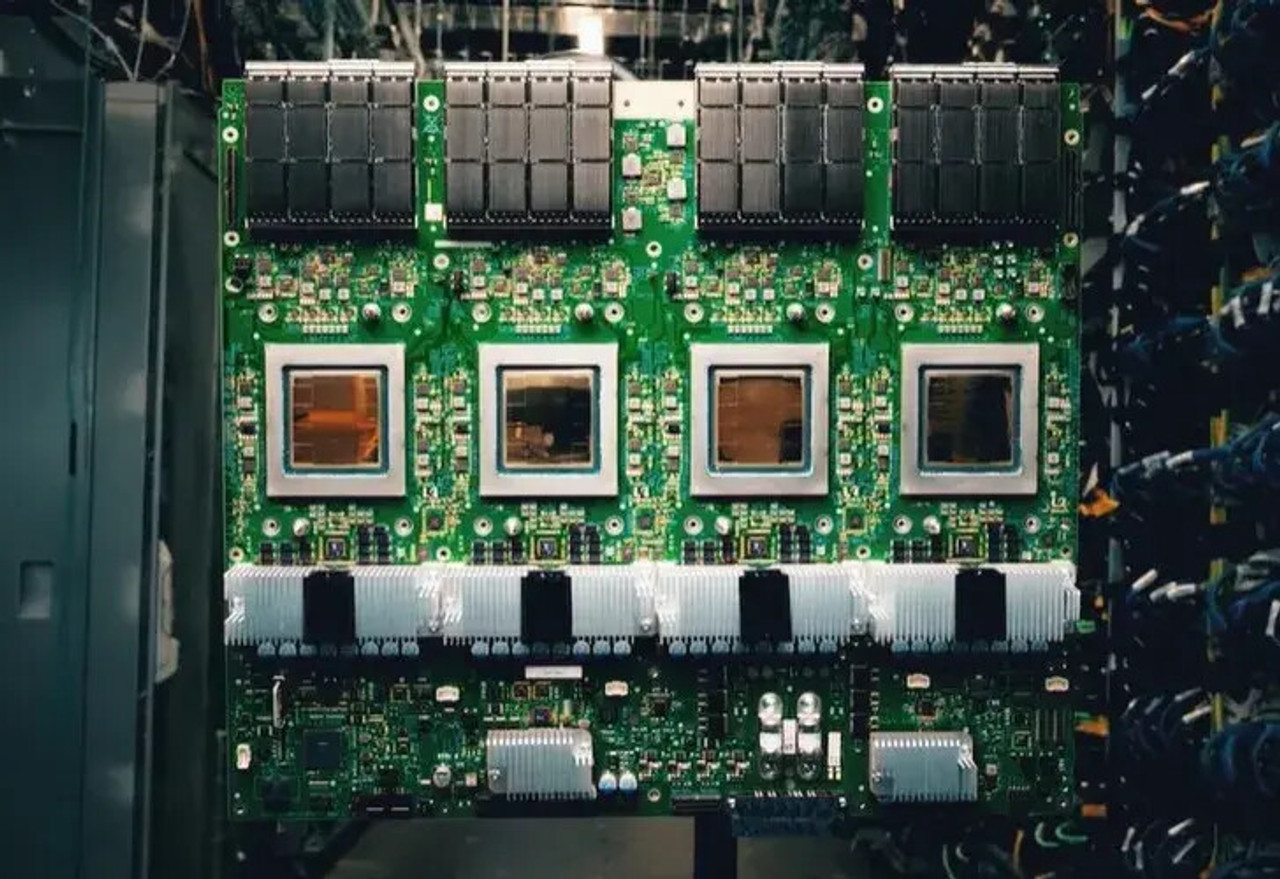

Today at Google Cloud Next ’25, Google unveiled Ironwood, its seventh-generation Tensor Processing Unit (TPU) and the most powerful AI accelerator the company has ever built. This isn’t just another hardware upgrade: Ironwood marks a major leap in how AI is powered and scaled, especially for inference. That means smarter AI models that don’t just respond to prompts but think, analyze, and proactively deliver insights.

A New Era: The Age of Inference

For over a decade, TPUs have been the engine behind Google's most intense AI workloads. Now, Ironwood is here to power the next frontier — what Google calls the “age of inference.” Instead of AI models just answering questions, we're moving into a world where they anticipate what we need, reason deeply, and generate insights on their own.

Ironwood was built specifically for this. It’s purpose-built to run inference at scale, from massive LLMs (like Gemini) to Mixture of Experts (MoE) and other "thinking" models that demand high-speed computation and communication.

What Makes Ironwood So Special?

At its core, Ironwood is an engineering marvel:

- Up to 9,216 liquid-cooled chips per pod, linked by a high-speed Inter-Chip Interconnect (ICI) network, with the full pod drawing almost 10 megawatts.
- 42.5 exaflops of compute at full scale, which Google says is more than 24 times the compute of El Capitan, the world’s largest supercomputer.
- 4,614 TFLOPs of peak compute per chip: think of that as raw horsepower for AI tasks.

This scale is part of Google Cloud’s AI Hypercomputer architecture, which combines hardware and software into a unified platform for AI developers.
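The pod-level compute figure can be checked from the per-chip numbers. The short Python sketch below multiplies the chip count by peak TFLOPs and, as a much rougher estimate, divides the roughly 10 MW figure across the chips. The function names are made up for illustration, decimal (SI) prefixes are assumed, and the per-chip power number is only an upper-bound guess, since the pod figure may also cover cooling and networking.

```python
# Figures quoted in the post; decimal (SI) prefixes are an assumption.
CHIPS_PER_POD = 9_216
PEAK_TFLOPS_PER_CHIP = 4_614
POD_POWER_MW = 10  # "almost 10 megawatts", so treat this as an upper bound


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute in exaflops (1 exaflop = 1e6 TFLOPs)."""
    return chips * tflops_per_chip / 1e6


def kw_per_chip(pod_mw: float, chips: int) -> float:
    """Even split of pod power across chips, in kW (hypothetical estimate)."""
    return pod_mw * 1_000 / chips


if __name__ == "__main__":
    print(f"{pod_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP):.2f} exaflops")  # 42.52 exaflops
    print(f"{kw_per_chip(POD_POWER_MW, CHIPS_PER_POD):.2f} kW per chip")        # 1.09 kW per chip
```

The first result matches the quoted 42.5 exaflops, which suggests the headline number is simply peak per-chip compute summed over a full pod.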

Built for Massive, Complex AI Models

Whether you’re training or serving ultra-large dense LLMs, or running inference-heavy recommendation engines, Ironwood is ready. Key enhancements include:

- Upgraded SparseCore: Ideal for ultra-large embeddings used in personalization, ranking, and scientific computing.
- 192 GB of High Bandwidth Memory (HBM) per chip, 6x that of its predecessor, Trillium.
- 7.2 TBps of HBM bandwidth per chip, 4.5x that of Trillium, for faster access to your data.
- 1.2 TBps bidirectional ICI bandwidth, for seamless communication between thousands of chips.

These aren’t just technical specs — they directly impact speed, reliability, and the ability to train or serve the biggest AI models in the world.
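To put the memory numbers in context, the sketch below derives a few figures from the specs quoted above: the Trillium values implied by the 6x and 4.5x ratios, the total HBM in a full 9,216-chip pod, and the time one chip would need to read its entire HBM once. The helper names are hypothetical and decimal units (1 TB = 1,000 GB) are assumed. The sweep time is only a back-of-the-envelope floor for a memory-bound inference step, under the simplifying assumption that the model fills HBM and every byte is read once per step.

```python
# Per-chip figures quoted in the post; decimal units are an assumption.
HBM_GB = 192
HBM_BW_TBPS = 7.2
CHIPS_PER_POD = 9_216


def implied_predecessor(value: float, ratio: float) -> float:
    """Back out the predecessor's figure from an 'N x more' claim."""
    return value / ratio


def pod_hbm_pb(hbm_gb: float, chips: int) -> float:
    """Total HBM across a pod in petabytes (1 PB = 1e6 GB)."""
    return hbm_gb * chips / 1e6


def full_hbm_sweep_ms(hbm_gb: float, bw_tbps: float) -> float:
    """Milliseconds for one chip to read all of its HBM once at peak bandwidth."""
    seconds = hbm_gb / (bw_tbps * 1_000)  # GB divided by GB/s
    return seconds * 1_000


if __name__ == "__main__":
    print(f"Implied Trillium HBM: {implied_predecessor(HBM_GB, 6):.0f} GB")                  # 32 GB
    print(f"Implied Trillium bandwidth: {implied_predecessor(HBM_BW_TBPS, 4.5):.2f} TBps")   # 1.60 TBps
    print(f"HBM per full pod: {pod_hbm_pb(HBM_GB, CHIPS_PER_POD):.2f} PB")                   # 1.77 PB
    print(f"Full HBM sweep: {full_hbm_sweep_ms(HBM_GB, HBM_BW_TBPS):.1f} ms")                # 26.7 ms
```

Under that assumption, a chip holding a model that occupies all 192 GB could not produce a step faster than roughly 27 ms from memory alone, which is why bandwidth matters as much as capacity for serving.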

Powered by Pathways, Scaled by Design

Ironwood integrates deeply with Pathways, Google’s ML runtime built by Google DeepMind. Pathways lets developers scale across tens or even hundreds of thousands of TPUs. That means you’re not limited to a single pod: you can combine many pods to build AI systems of truly planetary scale.

Efficiency that Matters

Ironwood isn’t just faster. It’s smarter with power, too:

- 2x better performance per watt compared to Trillium.
- 30x more power efficient than the original Cloud TPU from 2018.
- Advanced liquid cooling ensures stable, high performance even during continuous, heavy AI use.
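The two efficiency ratios can be combined with simple arithmetic. The sketch below assumes both claims measure the same thing (performance per watt), which the post does not state explicitly, and uses hypothetical function names. It backs out how Trillium would compare with the 2018 Cloud TPU and shows how a performance-per-watt gain translates into energy for a fixed amount of work.

```python
def implied_ratio(vs_first_gen: float, vs_predecessor: float) -> float:
    """If A is 30x better than C and 2x better than B, then B is 30/2 times better than C."""
    return vs_first_gen / vs_predecessor


def energy_for_same_work(baseline_kwh: float, perf_per_watt_gain: float) -> float:
    """Energy needed for the same work after a perf-per-watt improvement."""
    return baseline_kwh / perf_per_watt_gain


if __name__ == "__main__":
    # Implied Trillium efficiency relative to the 2018 Cloud TPU.
    print(implied_ratio(30, 2))            # 15.0
    # A job costing a hypothetical 100 kWh on Trillium, rerun on Ironwood.
    print(energy_for_same_work(100, 2))    # 50.0
```

The 100 kWh baseline is purely illustrative. The point is that a 2x gain in performance per watt halves the energy for the same job, before any cooling overhead is counted.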

Ready for What’s Next

Google Cloud’s experience in serving billions of users across services like Gmail, Search, and Translate feeds directly into Ironwood’s design. With it, developers and organizations get the tools to handle tomorrow’s AI challenges faster, more cost-effectively, and with less energy.

Expect to see groundbreaking models like Gemini 2.5 and even AlphaFold — the AI that cracked protein folding — running on Ironwood.

And this is just the beginning.